In digital marketing, successful campaigns hinge on one critical factor: data quality.

Did you know that 80% of AI pilots fail, largely because of fragile data foundations? Decisions based on unreliable data can seriously undermine your marketing efforts. In this post, we look at the data quality issues that plague organizations and explore how Agentic AI can turn broken data signals into actionable insights.

The Hidden Threat of Broken Data

Behind every underperforming campaign and misleading dashboard lies a silent culprit: broken data.

It doesn’t announce itself with alarms. Instead, it creeps in quietly:

- A missing conversion tag here.

- A mislabeled event there.

- An integration that silently fails overnight.

By the time teams realize something’s wrong, weeks or even months of decisions have already been made on a shaky foundation.

Leaders frequently attribute poor performance to strategy missteps, implementation flaws, or inadequate technology. However, the core issue often lies upstream, in the way data is gathered, validated, and transmitted. When data inputs fail, everything built on them suffers, leading to missed opportunities and misallocated resources.

The Importance of Data Quality in Marketing

Data quality isn’t just about clean spreadsheets – it’s the foundation of business growth and decision-making. When it breaks down, entire strategies falter.

- Gartner reports that poor data quality costs organizations an average of $12.9 million annually, draining resources that could otherwise fuel innovation and growth.

- A Monte Carlo data quality survey found that businesses lose over 30% of their revenue due to poor data quality – a figure that highlights how broken signals directly impact the bottom line.

- Analysts consistently warn that many data and analytics initiatives fail not because of strategy or execution, but because they are built on fragile, unreliable inputs.

The takeaway? Poor data quality isn’t a back-office problem. It’s a growth blocker that erodes trust, wastes budgets, and limits the ability of AI and analytics to deliver reliable insights.

So the critical question becomes: where exactly does data break down in the collection process, and how can Agentic AI solve it?

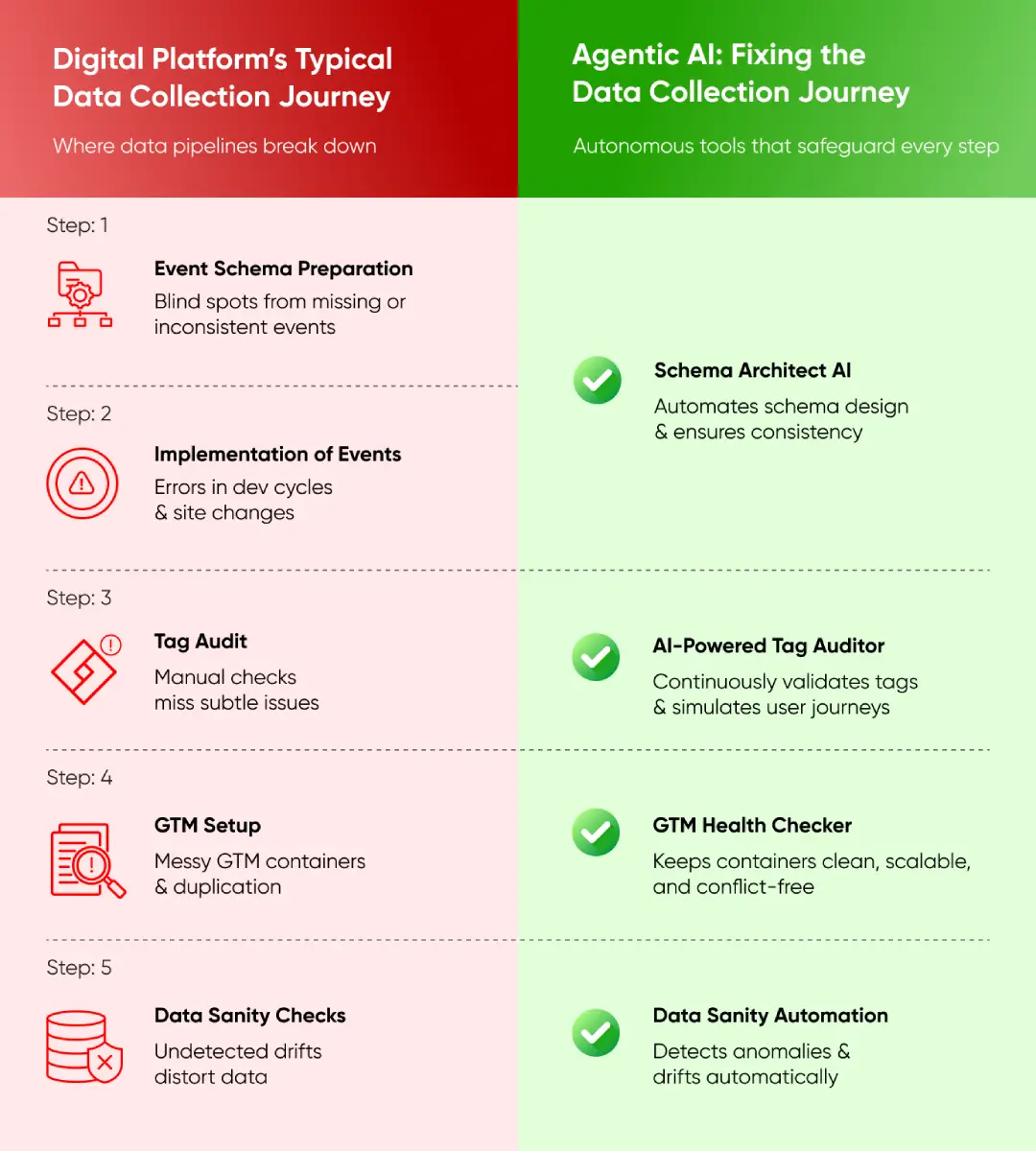

The Digital Platforms’ Data Collection Journey

Every organization today runs on a pipeline of customer and operational data. On the surface, it seems straightforward. But in reality, it’s a multi-step relay, where a dropped signal at any stage can compromise the entire system.

Here’s how the data journey typically unfolds:

1. Event Schema Preparation

The schema is the master blueprint for measurement. It determines which user behaviors (page views, sign-ups, purchases) are worth measuring, which parameters to record, and how to normalize naming conventions across platforms.

If events are misconfigured or tags are missing, the entire downstream system is compromised.

Where it breaks:

- Missing or misconfigured tags create blind spots.

- The same event is labeled differently across tools, fragmenting data.

- Developers push updates without QA, introducing silent breaks.
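To make the blueprint idea concrete, here is a minimal Python sketch of an event schema and a validator that could run as a QA step before release. The event names, parameters, and the `validate_event` helper are illustrative assumptions, not part of any GA4 or GTM API.

```python
import re

# Hypothetical schema: event name -> required parameters and their expected types.
EVENT_SCHEMA = {
    "page_view": {"page_location": str},
    "sign_up": {"method": str},
    "purchase": {"transaction_id": str, "value": float, "currency": str},
}

# Assumed naming convention: lowercase snake_case event names.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9_]*$")


def validate_event(name, params):
    """Return a list of problems; an empty list means the event conforms."""
    problems = []
    if not SNAKE_CASE.match(name):
        problems.append(f"event name '{name}' is not snake_case")
    spec = EVENT_SCHEMA.get(name)
    if spec is None:
        problems.append(f"event '{name}' is not in the schema")
        return problems
    for key, expected_type in spec.items():
        if key not in params:
            problems.append(f"missing parameter '{key}'")
        elif not isinstance(params[key], expected_type):
            problems.append(f"parameter '{key}' should be {expected_type.__name__}")
    return problems
```

Under these assumptions, a mislabeled event such as `Purchase` is reported both for breaking the naming convention and for being absent from the schema, which is the kind of silent fragmentation described above.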

2. Implementation of Events

Developers build the data layers based on the schema. This is the all-important translation phase: the blueprint is converted into live data signals that analytics tools can collect. The schema outlines what to track (events, parameters, user actions), and developers implement it in the site or app code.

Where it breaks:

- API updates that change field names but aren’t reflected in the connector.

- Events failing to sync without immediate visibility.

- Ad platform integrations sending incomplete or delayed data.
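A renamed API field is easy to catch if each incoming payload is compared against the fields the connector expects. The sketch below assumes a hypothetical connector with a known list of expected field names; `diff_fields` is an illustrative helper, not a function from any particular integration tool.

```python
def diff_fields(expected_fields, payload):
    """Compare the field names a connector expects with those actually received."""
    expected = set(expected_fields)
    actual = set(payload)
    return {
        "missing": sorted(expected - actual),      # expected but not delivered
        "unexpected": sorted(actual - expected),   # delivered but not mapped
    }


# Example: the upstream API renamed "campaign_id" to "campaignId".
report = diff_fields(
    ["campaign_id", "clicks", "cost"],
    {"campaignId": "c-42", "clicks": 10, "cost": 3.5},
)
```

A non-empty `missing` list paired with a similar-looking entry in `unexpected` is a strong hint that a field was renamed rather than dropped.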

3. Tag Audit

After deployment, tags must be checked against the schema to ensure they fire correctly and at the right times. Tag audits take place at the point of collection, when events leave the browser or app and enter analytics platforms.

Where it breaks:

- Schema drift (categories change over time, leaving old ones behind).

- Duplicate or incomplete records.

- Metrics that don’t reconcile between warehouse and BI tools.

Over time, these inconsistencies create systemic blind spots, making reporting untrustworthy.
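Two of these checks, duplicate detection and metric reconciliation, are simple to automate. The following Python sketch assumes records are dictionaries keyed by a `transaction_id` field and that a 1% relative difference between warehouse and BI totals is acceptable; both the key name and the tolerance are assumptions to adjust for your own data.

```python
from collections import Counter


def find_duplicates(records, key="transaction_id"):
    """Return the key values that appear on more than one record."""
    counts = Counter(r[key] for r in records if key in r)
    return sorted(k for k, n in counts.items() if n > 1)


def reconciles(warehouse_total, bi_total, tolerance=0.01):
    """True if the BI figure is within a relative tolerance of the warehouse figure."""
    if warehouse_total == 0:
        return bi_total == 0
    return abs(warehouse_total - bi_total) / abs(warehouse_total) <= tolerance
```

Running both checks on a schedule turns reconciliation from an occasional manual exercise into a routine signal.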

4. GTM Setup

Google Tag Manager (GTM) is the orchestration center where tags, triggers, and variables are managed. Events are also configured inside the GTM container, enabling disciplined tracking. A tidy, modular GTM setup simplifies updates and scaling.

Where it breaks:

- Validation is skipped due to lack of bandwidth.

- Checks are conducted reactively (only when something is clearly broken).

- By the time issues are detected, budgets have already been wasted.

5. Data Sanity Checks

Once data lands in GA4, sanity checks confirm that what is being captured matches expectations. These involve audits of event volumes, parameter distributions, and anomalies across dimensions.

Where it breaks:

- Campaigns optimized against faulty signals.

- Budgets wasted due to unreliable attribution.

- Stakeholder trust erodes when reports don’t align with reality.
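An event-volume sanity check can be as simple as comparing each day's count with the recent past. The sketch below is one possible approach, not a prescribed method: it flags days whose count deviates from the trailing window's mean by more than a chosen number of standard deviations. The window size and threshold are assumptions, and the daily counts would come from your own GA4 export.

```python
from statistics import mean, stdev


def flag_volume_anomalies(daily_counts, window=7, threshold=3.0):
    """Return indexes of days whose count is far from the trailing-window mean."""
    anomalies = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is worth a look.
            if daily_counts[i] != mu:
                anomalies.append(i)
            continue
        if abs(daily_counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Example: a purchase event that nearly stops firing on day index 8.
counts = [100, 102, 98, 101, 99, 100, 103, 101, 5, 100]
flagged = flag_volume_anomalies(counts)
```

Note that an outlier inflates the standard deviation of the windows that follow it, so a production check would likely exclude flagged days from the history or use a more robust statistic such as the median.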

Where It Breaks: The Silent Culprits of Data Quality Problems

Broken data doesn’t usually come from one catastrophic failure. Instead, it’s the cumulative effect of small, invisible cracks:

- Tagging & Event Setup → Missing tags, mislabeled events, rushed deployments.

- Data Transfer → API mismatches, failed syncs, incomplete ad platform integrations.

- Data Storage → Schema drift, duplicates, unreconciled metrics.

- Validation → Sporadic, manual, or skipped entirely when resources are stretched.

- Reporting → Dashboards aggregating incomplete or delayed signals.

By the time reporting surfaces these cracks, it’s already too late. Decisions have been made. ROI has leaked. Trust is lost.

According to Accenture, 63% of companies using AI are still “experimenting” without mature, problem-specific data architectures – leading to fragile systems that break under scale.

The Cost of Broken Signals

The consequences of weak data integrity ripple through the business:

- Wrong optimization decisions → Campaigns tuned to noise instead of actual performance signals.

- ROI leakage → Budgets wasted due to misdirected spend.

- Erosion of trust → Executives question whether dashboards reflect reality.

- Stalled AI adoption → 80% of AI pilots fail (Harvard Business Review) largely because they’re built on fragile, unreliable data foundations.

This isn’t about lacking data. It’s about not being able to trust the data you already have.

How Agentic AI Changes the Equation

Traditional approaches to data quality rely on manual audits, reactive fixes, and one-off validations. They’re slow, resource-heavy, and rarely keep pace with the complexity of modern data pipelines.

Agentic AI transforms this dynamic by acting as an autonomous guardian of the data ecosystem. Unlike static monitoring, it continuously observes, validates, and corrects issues across the pipeline – without waiting for humans to intervene.

Think of it as a living toolkit that self-heals your measurement backbone.

Agentic AI in Action

Here’s how autonomous agents step in at the most failure-prone steps:

1. Schema Architect

- Suggests the right events, parameters, and taxonomies based on business goals and industry benchmarks.

- Runs automated tag audits to ensure every event is firing correctly.

- Uses auto-capture to detect and log user interactions without relying solely on manual tagging.

Outcome: Fewer missed events, reduced developer dependency, and schemas that stay lean yet comprehensive.

2. AI-powered Tag Auditor

- Continuously validates firing rules across GA4, GTM, and ad platforms.

- Replicates real user journeys to catch hidden errors in event tracking.

- Flags duplicate or unused tags before they bloat your GTM container.

Outcome: Cleaner, conflict-free tags and more trustworthy first-party data at the source.
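As a rough illustration of the duplicate and unused-tag check, the Python sketch below audits a simplified, hypothetical representation of a container. The field names (`name`, `type`, `params`, `trigger_ids`, `id`) are assumptions chosen for readability and do not mirror the actual GTM export format.

```python
import json
from collections import defaultdict


def audit_container(tags, triggers):
    """Flag tags that can never fire, triggers nobody uses, and identical tags."""
    used_triggers = set()
    by_fingerprint = defaultdict(list)
    tags_without_triggers = []

    for tag in tags:
        trigger_ids = tag.get("trigger_ids", [])
        if not trigger_ids:
            tags_without_triggers.append(tag["name"])
        used_triggers.update(trigger_ids)
        # Two tags with the same type, parameters, and triggers are duplicates.
        fingerprint = (
            tag["type"],
            json.dumps(tag.get("params", {}), sort_keys=True),
            tuple(sorted(trigger_ids)),
        )
        by_fingerprint[fingerprint].append(tag["name"])

    return {
        "tags_without_triggers": sorted(tags_without_triggers),
        "unused_triggers": sorted(t["id"] for t in triggers if t["id"] not in used_triggers),
        "duplicate_tags": sorted(sorted(n) for n in by_fingerprint.values() if len(n) > 1),
    }
```

An agent running a check like this after every container change could surface clutter before it reaches production, which is the behavior described in the bullets above.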

3. GTM Health Checker

- Monitors schema alignment in real time to prevent drift or anomalies.

- Blocks flawed inputs from contaminating reporting dashboards and ML models.

- Aligns event structures with downstream BI tools for seamless handoffs.

Outcome: Reliable data pipelines that support consistent decision-making and AI accuracy.

4. Data Sanity Automation

- Automates post-deployment GTM audits so issues don’t slip through.

- Runs continuous sanity checks on live data, spotting spikes, gaps, or abnormal patterns the moment they happen.

- Converts one-off audits into permanent safeguards against recurring errors.

Outcome: A self-healing system that keeps data clean, even as your digital ecosystem evolves.

From Fragile Pipelines to Trusted Insights

The difference between broken signals and trusted insights isn’t just about accuracy – it’s about resilience.

With Agentic AI:

- Every step of the data journey is validated and corrected continuously.

- Leaders can operate with confidence in their dashboards.

- Teams spend less time firefighting and more time driving growth.

- AI adoption becomes sustainable because foundations are strong.

Instead of relying on sporadic audits, organizations gain a self-healing, always-on data backbone that keeps insights reliable at scale.

Checklist: Is Your Data Pipeline at Risk?

Ask yourself:

- Are our tags and events validated automatically – or only during campaign launches?

- Do our dashboards reconcile with warehouse numbers consistently?

- How quickly do we detect schema drift or API mismatches?

- Are data quality issues identified in real time – or after budget has already leaked?

- Can our AI initiatives scale without fragile foundations?

If most of these answers aren’t reassuring, your pipeline is already showing cracks.

Closing Takeaway

Data integrity isn’t a one-time checkpoint – it’s a continuous process.

Agentic AI transforms fragile pipelines into resilient, self-healing systems, turning broken signals into trusted insights leaders can act on with confidence.

Don’t let cracks in your data collection slow growth.

Explore how Tatvic’s Agentic AI services can safeguard your data pipeline and unlock reliable AI insights at scale.