Curious how A/B testing in CRO (Conversion Rate Optimization) helps turn everyday user actions into data-driven strategies that boost conversions?

Every click, form submission, or sale on your site tells a story, and A/B testing helps you translate those interactions into smarter decisions.

“You can’t improve what you don’t measure.”

~Peter Drucker

In a world where even a 1% lift in conversion can mean thousands of extra dollars, relying on guesswork just doesn’t cut it.

Instead, you need a systematic, repeatable approach to testing, backed by robust AB testing services and the right services partner to guide you.

Whether you’re a marketer, product manager, UX designer, or business owner, this guide will walk you through everything from the basics of A/B testing to advanced statistical methods, real‑world case studies, and a step‑by‑step playbook for running your own high‑impact experiments.

Let’s dive in.

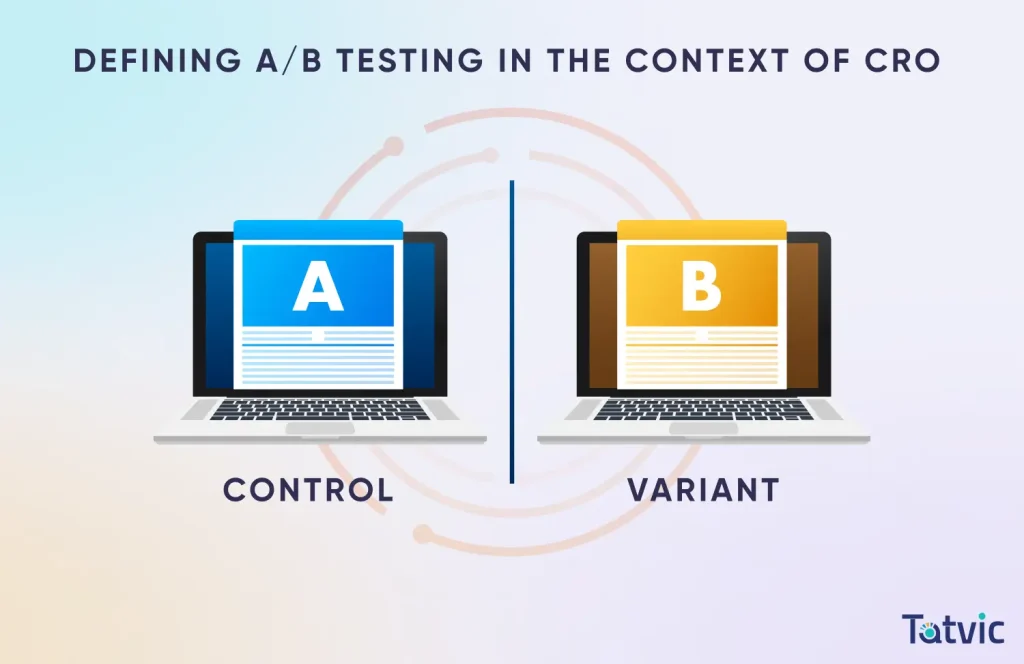

Defining A/B Testing in the Context of CRO (Conversion Rate Optimization)

A/B testing, also known as split testing, is the process of comparing two or more variations of a web page to find the higher-performing one and improve the page’s overall conversion rate.

It is a continuous process that lets you identify which variation works better for your target audience, based on statistical analysis rather than opinion.

At its heart, A/B testing in CRO is a controlled experiment:

- You show Version A (the control) to some users,

- Version B (the variant) to others,

- Then measure which one performs better against your key metric, be it click‑through rate (CTR), form completion, or purchase rate.

This isn’t hokey magic; it’s a rigorous approach that turns user behavior into a roadmap for improving your site.
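
To make the "some users see A, others see B" idea concrete, here is a minimal sketch of one common way to split traffic: hashing a user ID into a bucket. The function name `assign_variant` and the experiment name are hypothetical, and real testing tools handle this for you; the point is only that assignment should be stable (the same user always sees the same version) and roughly even.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant for one experiment."""
    # Hash the experiment name together with the user ID so the same user
    # can land in different buckets across different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "hero-image-test")] += 1
    print(counts)  # the two counts should be close to an even split
```

Because the assignment depends only on the user ID and experiment name, a returning visitor keeps seeing the same version, which keeps the comparison clean.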

Why A/B Testing Is Essential for CRO Success

A/B testing allows you to experiment with different versions of a webpage to see what resonates most with your audience. It takes the guesswork out of optimization by relying on actual user behavior and performance metrics.

By continuously testing and refining elements like headlines, CTAs, and layouts, you can steadily improve conversion rates.

Ultimately, A/B testing empowers data-backed decision-making that leads to measurable growth in your CRO efforts.

Why invest time and budget in AB testing services?

Because it’s the only way to reliably improve conversions without guesswork.

Here’s How Structured A/B Testing in CRO Fuels Growth:

- Reduce Bounce Rate: By experimenting with different headlines, imagery, and calls‑to‑action, you give visitors what they are looking for, keeping them on your site longer and reducing bounce.

- Data-Driven Decisions: Move beyond gut feelings. Test hypotheses and let real user data guide you. Every test result informs your next move.

- Understand User Behavior: Watch how different segments respond. Maybe desktop users love your long‑form copy, but mobile visitors prefer bullet points. AB testing services reveal these nuances.

- Maximize Existing Traffic: Instead of pouring more money into ad spend, get more value from the visitors you already have. Even a 5% lift in conversion from AB testing services can outperform a new ad campaign.

- Reduce Risk: Rolling out a site‑wide redesign can backfire. With A/B tests, you can roll out incremental changes, validate them, then apply them broadly, minimizing the risk of unexpected drops.

Did you know?

Companies that rigorously use A/B testing grow their revenues 1.5 to 2x faster than those that don’t.

Monitoring these changes over time also gives you statistically significant, reliable data to act on.

Apart from these, A/B testing also helps in:

- Experimenting with various elements of an app, website, or landing page to boost conversion

- Encouraging continuous improvement in user experience

- Improving user engagement and user navigation

- Improving the checkout experience

- Getting a higher ROI (Return On Investment) without additional ad spend

Case Snippet: Titan Eye+

Through A/B testing, Tatvic boosted page views and purchases for Titan Eye+, enhancing their online revenue share. A/B and multivariate testing acted as core pillars of a winning CRO strategy.

A/B Testing in Marketing: Beyond Simple Experiments

A/B testing isn’t just for websites; it’s a universal tool in any digital marketer’s toolbox.

It helps marketers make informed decisions by testing variations in emails, ads, and landing pages to see what performs best.

Whether it’s optimizing subject lines or experimenting with ad creatives, A/B testing brings clarity to campaign performance.

This method reduces assumptions and highlights what truly engages your audience.

When used consistently, it drives smarter strategies and better ROI across all channels.

Here’s how A/B Testing powers different channels:

1. Email Campaigns

- Subject Lines: “20% off today only!” v/s “Your exclusive 20% savings inside.”

- Preview Text: Changing the first line can boost open rates by 5–10%.

- CTA Buttons: Shape, phrasing (“Start Now” v/s “Get Started”), and placement.

2. Landing Pages

- Headline Positioning: Above the fold v/s further down.

- Lead Magnet Format: PDF download v/s video series.

- Form Length: Two fields v/s five fields.

3. Paid Advertisements

- Ad Copy: Highlighting features v/s benefits.

- Creative: Static images v/s short GIFs.

- Ad Format: Carousel v/s single image.

4. In-App Experiences

- Onboarding Flow: Step‑by‑step tutorial vs. “skip” option.

- Feature Prompts: Modal window v/s inline tooltip.

- Pricing Upsells: Hard sell v/s gentle reminder.

A testing tool that supports multi‑channel experiments (web, email, ads, and in‑app) becomes a force multiplier for your overall marketing ROI.

AB testing services become crucial for results that drive business growth.

Types of A/B Tests in CRO (Conversion Rate Optimization) & When to Use Them

Different A/B testing methods serve unique goals in the CRO journey, from simple button color changes to complex multi-page experiments.

Knowing which type to use, be it split testing, multivariate, or multi-page testing, can greatly influence your outcomes.

Each test provides distinct insights into user behavior and interaction patterns.

By aligning the test type with your specific objective, you can optimize user experience and boost conversions more effectively.

A Deep Dive into the Different Types of A/B Tests Used in CRO

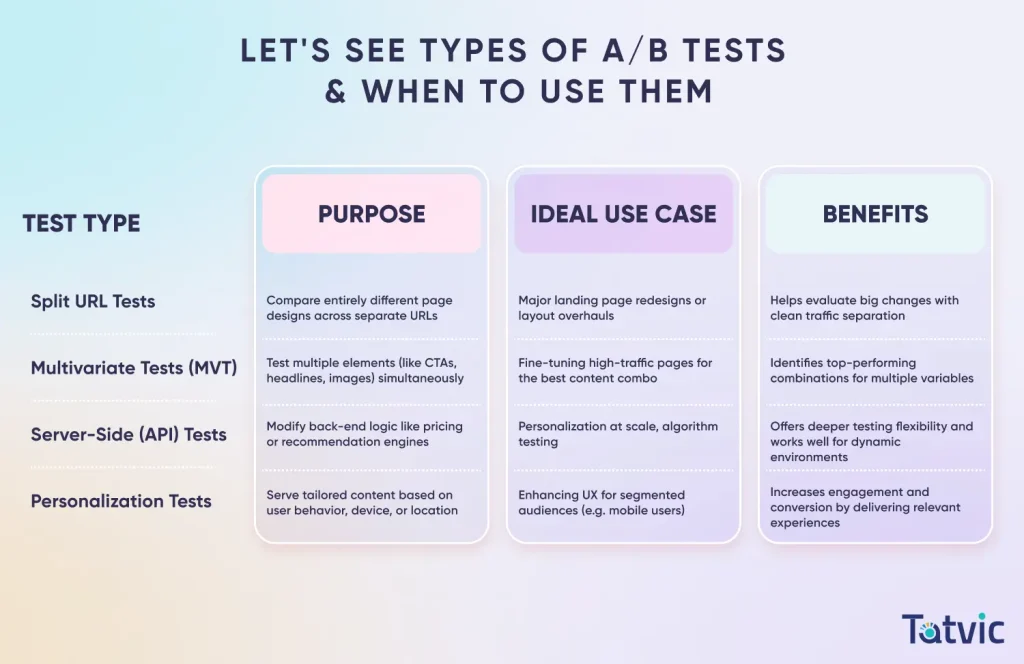

- Split URL Tests: Compare entirely different page designs on separate URLs. This is ideal for landing‑page overhauls and is commonly run via AB testing services. These tests are well suited to evaluating drastic design changes or entirely new page concepts. By directing traffic to two or more different URLs, businesses can gather clear performance insights. This method shows which overall page structure resonates with users, rather than the effect of a minor tweak. It’s especially effective for landing pages or campaign microsites.

- Multivariate Tests (MVT): Test multiple elements (headlines, images, CTAs) at once on a single page to pinpoint the best combination. MVT is ideal when you’re optimizing several page elements simultaneously to see which combinations perform best. It provides deeper insights than simple A/B tests by showing interaction effects between variables. However, it requires a larger traffic volume to generate statistically significant results. Use it when you want fine-tuned control over user experience.

- Server-Side (API) Tests: Experiment on backend logic (pricing engines or recommendation modules) for precise control and technical flexibility. These tests go beyond visual changes by focusing on back-end functionalities like algorithmic recommendations or dynamic pricing. Since they run at the server level, they avoid the client-side script overhead that can slow page loads, and they allow broader testing capabilities. They’re ideal for more technical teams aiming for deep customization. Server-side testing is great for scaling personalized user journeys.

- Personalization Tests: Deliver tailored content to specific user segments (by behavior, device, or source) to boost relevance and conversions. With these, you serve content tailored to unique user attributes, like geography, browsing history, or referral channel. They help deliver a more relevant experience that increases engagement and conversion. Personalization tests are particularly useful for eCommerce and content-heavy platforms. Over time, they help build loyalty by making users feel understood.

Let’s See Types of A/B Tests & When to Use Them:

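Note: Multivariate tests split your traffic across every combination of elements, so they typically need several times the traffic of a split test (at least 5× as a rule of thumb) to reach statistical significance. Plan accordingly.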

In practice, you’ll often run multiple test types in parallel using AB testing services that let you manage Split, MVT and Server‑Side Tests from one dashboard.
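
To see why multivariate tests are so traffic-hungry, it helps to count the combinations. The sketch below assumes a full-factorial MVT (every option of every element is combined with every other); the element names, options, and visitor numbers are made up for illustration.

```python
from itertools import product

# Hypothetical page elements and the options being tested for each.
elements = {
    "headline": ["H1", "H2"],
    "image": ["photo", "illustration"],
    "cta": ["Get Started", "Try for Free", "Start Now"],
}


def mvt_cells(elements: dict) -> list:
    """Return every combination of options in a full-factorial MVT."""
    return list(product(*elements.values()))


def visitors_per_cell(daily_visitors: int, elements: dict) -> float:
    """Average daily visitors each combination receives with an even split."""
    return daily_visitors / len(mvt_cells(elements))


if __name__ == "__main__":
    print(len(mvt_cells(elements)))           # 2 * 2 * 3 = 12 combinations
    print(visitors_per_cell(6000, elements))  # 500.0 visitors per combination
```

With 6,000 daily visitors, a simple two-variant split test would send 3,000 to each version, while this 12-cell MVT sends only 500 to each combination, so each cell takes far longer to collect enough data.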

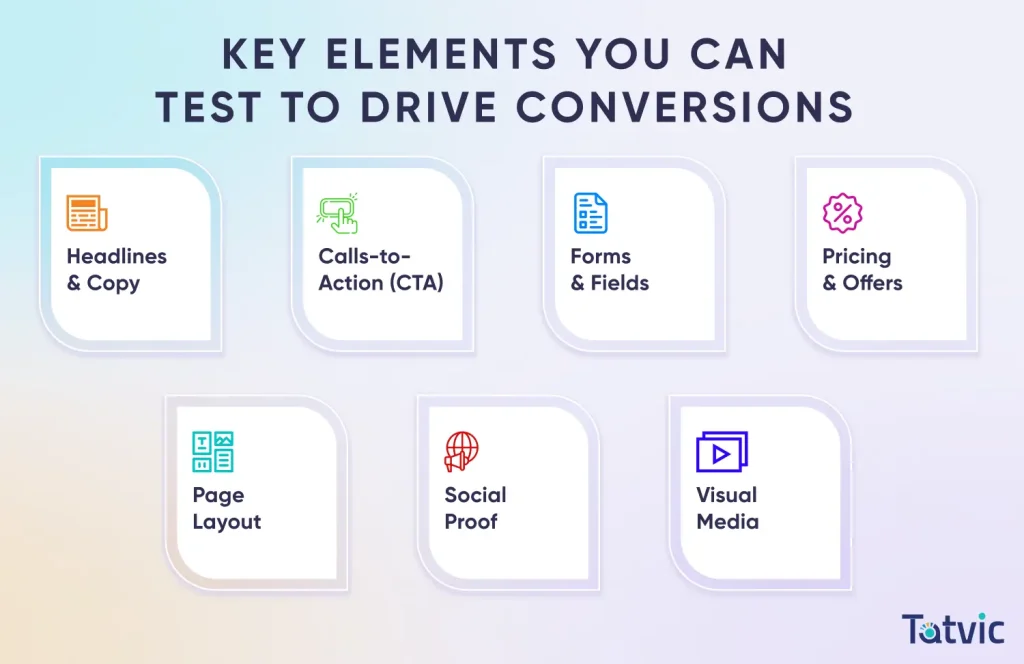

Key Elements You Can Test to Drive Conversions

There are many elements on your website that directly influence a visitor’s decision to convert, and A/B testing helps you find which ones matter most.

From headlines and call-to-actions to forms and page layouts, each component plays a role in user experience.

By systematically testing these elements, you can uncover what truly resonates with your audience.

This data-driven approach helps turn insights into higher conversions over time.

Let’s look at a few of the key elements:

- Headlines & Copy: Your headline is the first impression, so make sure it clearly states what you offer and why it matters. Testing different tones, lengths, or emotional triggers helps you discover which messaging hooks your audience most effectively.

- Calls-to-Action (CTA): A CTA button’s wording, size, and placement guide users toward the next step in their journey. By experimenting with phrasing (“Get Started” vs. “Try for Free”), button styles, and positioning, you’ll learn how to drive more clicks and conversions.

- Forms & Fields: Every extra field you ask users to fill in adds friction, and fewer inputs usually mean higher completion rates. Test variations in field count, label style (inline vs. above), and real‑time validation to find the sweet spot between data collection and user convenience.

- Page Layout: How you arrange text, images, and CTAs can make or break the user flow. Try different column structures, whitespace levels, and navigation placements to see which layout keeps visitors engaged and moving toward your goal.

- Social Proof: Trust badges, testimonials, and star ratings reassure visitors that they’re making the right choice. A/B test formats (short quotes vs. full case studies) and placement (inline vs. sidebar) to discover which social proof drives the biggest uplift.

- Visual Media: Images, icons, and videos can boost engagement, but only if they’re relevant and high‑quality. Compare static imagery vs. short looping videos, or custom illustrations vs. stock photos, to learn what resonates best with your audience.

- Pricing & Offers: How you present pricing (discount labels, “Most Popular” badges, or countdown timers) can create urgency and clarity. Test different offer structures (percentage off vs. dollar savings) and time‑limited messages to maximize perceived value and drive more purchases.

As you incorporate each tweak, your testing backlog grows, fueling future hypotheses and making your CRO program self-sustaining. Leveraging AB testing services helps ensure that every test is set up correctly, analyzed properly, and iterated on for long-term performance gains.

Statistical Significance: When to Trust Your Results

1. Frequentist Tests

- Long‑Term Observation: Fix your required sample size up front and wait until you reach it (often tens of thousands of views).

- P‑Value Threshold: Commonly 0.05, which corresponds to a 95% confidence level.

- Pros: Well‑understood, widely supported.

- Cons: Doesn’t let you peek early without risking false positives.
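
As an illustration of the frequentist approach, here is a minimal sketch of a two-proportion z-test, one standard way to get a p-value for a conversion-rate comparison. The function name and the visitor and conversion counts are hypothetical; your testing tool may use a different test under the hood.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical data: 500/10,000 conversions for A, 580/10,000 for B.
    z, p = two_proportion_z_test(500, 10_000, 580, 10_000)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
    print("significant at 0.05" if p < 0.05 else "not significant at 0.05")
```

With these made-up counts the p-value falls below the 0.05 threshold, but that conclusion only holds if the sample size was fixed in advance and the result was checked once, at the end.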

2. Bayesian Tests

- Posterior Probability: Gives the probability that one variant outperforms another, along with a credible interval for the size of the lift.

- Flexibility: You can check results mid‑test.

- Pros: Faster insights; easier to interpret probability statements.

- Cons: Requires more statistical sophistication and careful priors.
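
For comparison, here is a minimal Bayesian sketch using a Beta-Binomial model with Monte Carlo sampling. It assumes a uniform Beta(1, 1) prior for both variants, which is a simplifying choice rather than a recommendation, and the function name and counts are hypothetical.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 20_000, seed: int = 42) -> float:
    """Estimate P(rate_B > rate_A) by sampling from each posterior."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior is Beta(1 + conversions, 1 + non-conversions)
        # when the prior is the uniform Beta(1, 1).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Hypothetical data: 500/10,000 conversions for A, 580/10,000 for B.
    probability = prob_b_beats_a(500, 10_000, 580, 10_000)
    print(f"P(B beats A) is about {probability:.3f}")
```

The output reads as a direct probability statement ("B is very likely better than A"), which is the interpretability advantage mentioned above. A different prior would shift the result, especially with small samples.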

Quick Checklist for Reliable Tests

- Minimum sample size achieved?

- Run time covers full business cycles (weekends, weekdays)?

- No major traffic source changes (like a viral tweet)?

- One variable changed at a time?

Note: Most AB testing services include built‑in significance calculators. Use them, but always double‑check with your own traffic numbers and business context.
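
If you want to sanity-check the "minimum sample size" item yourself, the sketch below uses a standard normal-approximation formula for comparing two proportions. The defaults (5% significance, 80% power) are common conventions, not values from any particular tool, and the function name and example rates are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical: 5% baseline conversion, aiming to detect a 10% relative lift.
    print(sample_size_per_variant(0.05, 0.10))
```

For a 5% baseline and a 10% relative lift, this works out to roughly 31,000 visitors per variant. Smaller expected lifts push the number up quickly, since the required sample grows with the inverse square of the difference you want to detect.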

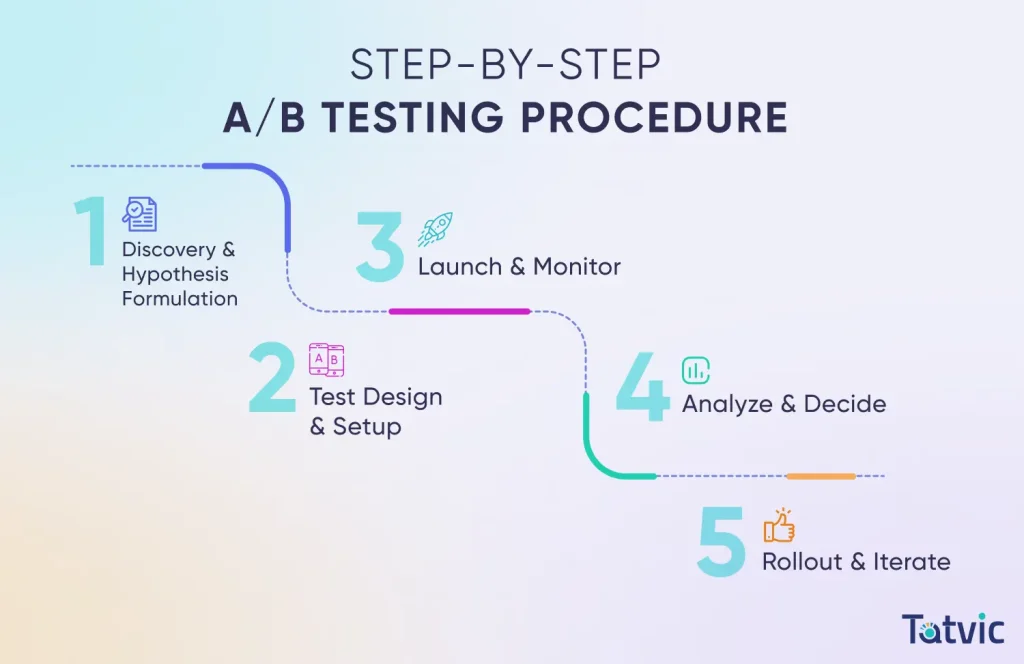

Step-by-Step A/B Testing Procedure To Be Used In CRO

Step 1: Discovery & Hypothesis Formulation

- Audit your analytics: Where are you leaking conversions?

- Gather qualitative inputs: Heatmaps, session recordings, on‑site surveys.

- Craft a clear hypothesis: “If we change the hero image to user‑generated photos, conversions will increase by 10%.”

Step 2: Test Design & Setup

- Choose your test type: split, MVT, or server‑side.

- Build your variants in the testing tool (or ask your AB testing services provider to set it up).

- Define your primary metric (e.g., “add to cart clicks”) and any secondary metrics (time on page, scroll depth).

Step 3: Launch & Monitor

- Start the experiment, directing an equal traffic split to each variant.

- Monitor for technical issues: broken elements, mis‑fires on certain browsers.

- Keep an eye on sample size projections to know when you’ll reach significance.

Step 4: Analyze & Decide

- Let the data settle—avoid early peeking.

- Check overall lift and segment performance (device, geography, traffic channel).

- Decide: implement the winner, declare a tie, or scrap and learn.
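
Checking segments alongside the overall lift can be sketched in a few lines. The numbers below are invented to show how a healthy overall result can hide a segment that went backwards. Keep in mind that each segment has a smaller sample than the whole test, so segment-level differences are best treated as leads for follow-up tests rather than final answers.

```python
def relative_lift(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Relative change in conversion rate of B compared with A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    return (rate_b - rate_a) / rate_a


# Hypothetical results per segment:
# (conversions_A, visitors_A, conversions_B, visitors_B)
segments = {
    "desktop": (300, 5000, 390, 5000),
    "mobile": (200, 5000, 190, 5000),
}


def lift_report(segments: dict) -> dict:
    """Relative lift for each segment plus the overall lift."""
    report = {name: relative_lift(*counts) for name, counts in segments.items()}
    # Sum each column across segments to get the overall totals.
    totals = [sum(column) for column in zip(*segments.values())]
    report["overall"] = relative_lift(*totals)
    return report


if __name__ == "__main__":
    for name, lift in lift_report(segments).items():
        print(f"{name}: {lift:+.1%}")
```

In this made-up data the overall lift is +16%, driven by a +30% lift on desktop, while mobile actually drops by 5%.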

Step 5: Rollout & Iterate

- Roll out the winning version sitewide.

- Document results and lessons learned in your test repository.

- Ideate the next test based on these insights—rinse and repeat.

Using professional AB testing services means you get templates, QA scripts, and reporting dashboards that streamline this entire process.


Real-World Examples: Case Studies of A/B Testing Driving CRO

Theory is great, but real businesses need real results.

Here are three case studies showing how Tatvic’s AB testing services, powered by best‑in‑class testing tools, turned hypotheses into dollars:

1. A Travel Website saw 30% Uplift in Conversion

Scenario: A leading travel portal faced high drop‑offs on its hotel listing page where users browsed rooms but rarely booked. Analytics and feature‑attribution analysis revealed a lack of urgency as the key barrier.

Approach: Working with Tatvic’s CRO Expert Team, they hypothesized that real‑time scarcity cues would drive bookings. In Variant B, set up in our preferred testing tool, they displayed live viewer counts (“X people viewing this hotel”) and a rooms‑remaining indicator. Traffic was split 50/50 to measure impact on booking completions.

Result: The urgency‑driven variant delivered a 30% uplift in completed bookings, directly boosting revenue without any additional ad spend.

2. Online Marketplace Appointment Simplification: 70% More Lead Generation

Scenario: A large used‑car marketplace struggled to convert homepage visitors into appointment bookings. Multi‑stage navigation was causing friction, and only a small fraction of users completed the form.

Approach: Using Tatvic’s AB testing services and comprehensive Google Analytics tracking, they identified the cumbersome navigation as the culprit. Variant B replaced it with a single‐page lead‑gen form headlined “Sell Your Used Car in 30 Minutes,” and swapped out fields (city, date, time, mobile number) for previously under‑utilized inputs. The experiment ran via our testing tool with an equal traffic split.

Result: After two weeks, the desktop variant generated 66% more leads, and the mobile variant saw a 70% increase in leads, validating the simplified workflow.

3. “Book A Test Ride” Form Redesign: 93% increase in leads

Scenario: An Indian multinational motorcycle manufacturer wanted to boost submissions on its “Book Test Ride” form but saw low engagement despite healthy traffic.

Approach: Leveraging Tatvic’s AB testing services and form‑analytics data, they redesigned the form for Variant B, adding a floating CTA on the homepage and reducing the number of fields while reinforcing the call‑to‑action. The new design was implemented in their testing tool and tested across desktop and mobile.

Result: Over 27 days, the desktop variant drove a 93% increase in leads, and the mobile variant delivered a 44% lift, turning the Test Ride form into a top‑performing conversion funnel.

Key Takeaway: In each scenario, targeted experiments backed by Tatvic’s AB testing services and advanced testing tool setups transformed user insights into significant conversion gains. Continuous testing and iteration are the engines of sustainable growth.

A/B Testing as Part of a Holistic CRO (Conversion Rate Optimization) Strategy

A/B tests are powerful, but they shouldn’t operate in a vacuum. The most successful programs integrate AB testing services into a broader CRO ecosystem to ensure your experiments are meaningful and aligned with larger goals.

- User Research & Personas: Develop empathy for your users. Surveys, interviews, and analytics paint the “who” behind the clicks. AB testing services often use these insights to guide what to test and why.

- UX Audits & Heuristic Reviews: Experts identify friction points, and your test hypotheses often come from these reviews.

- Qualitative Feedback: On‑site polls, chat transcripts, and session replays reveal why users behave the way they do.

- Cross-Functional Collaboration: Marketing, design, analytics and development must align around your test roadmap and share ownership of results.

By embedding testing within continuous user research and cross-team workflows, you ensure each experiment builds on the last—creating a virtuous cycle of insights and optimizations.

AB testing services that align with your team structure and user data can accelerate your path to impactful, sustained conversion gains.

Best Practices and Common Pitfalls to Avoid

All too often, teams launch A/B tests only to see disappointing results, usually because they skipped some fundamentals.

Best Practices to be followed while implementing A/B Tests in CRO

- One Change at a Time: Keep your hypotheses clean by isolating variables.

- Sufficient Traffic & Duration: Wait until you have both reached your sample size and run the test through at least one full weekly cycle.

- Thorough QA: Preview variants on multiple devices, browsers, and user states (logged in v/s guest).

- Document Everything: Maintain a living backlog of tests: your institutional memory for what worked, what didn’t, and why.

Avoiding Common Pitfalls in A/B Tests for CRO Success

- Stopping Early: A small early uplift can evaporate with more data—never call a winner too soon.

- Over-Testing: Too many concurrent experiments can cause interaction effects and muddy results.

- Ignoring Segments: A 10% overall lift might hide that mobile users saw no benefit or even a drop in conversion.

- Neglecting Follow-Up: Negative or inconclusive results are still valuable insights. Capture them before moving on.

Warning: Skipping proper QA and statistical checks can turn every experiment into a potential liability rather than a learning opportunity.
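
The "Stopping Early" pitfall is easy to demonstrate with a small simulation. The sketch below runs A/A tests, where both versions are identical and any "winner" is a false positive, and compares how often a significant result appears if you check at every interim look versus only once at the end. All parameters (number of runs, looks, conversion rate) are arbitrary illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a: int, conv_b: int, n: int, alpha: float = 0.05) -> bool:
    """Two-sided two-proportion z-test with n visitors in each arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return False  # no variation yet, so nothing to test
    std_err = sqrt(pooled * (1 - pooled) * 2 / n)
    z = (conv_b / n - conv_a / n) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def simulate_aa_tests(runs: int = 300, looks: int = 10,
                      users_per_look: int = 200, rate: float = 0.05,
                      seed: int = 7):
    """Return (false-positive rate with peeking, rate with one final check)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        flagged_at_any_look = False
        for _ in range(looks):
            # Both arms share the same true conversion rate (an A/A test).
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            if is_significant(conv_a, conv_b, n):
                flagged_at_any_look = True
        peeking_hits += flagged_at_any_look
        final_hits += is_significant(conv_a, conv_b, n)
    return peeking_hits / runs, final_hits / runs


if __name__ == "__main__":
    peeking_rate, final_rate = simulate_aa_tests()
    print(f"false positives when stopping at any significant look: {peeking_rate:.1%}")
    print(f"false positives when checking once at the end: {final_rate:.1%}")
```

Checking only at the end should keep false positives near the 5% you signed up for, while stopping at the first significant look can only match or exceed that rate, and usually exceeds it by a wide margin.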

Conclusion: Making A/B Testing in CRO Your Growth Engine

A/B testing is more than just a tool in your CRO toolbox; it’s the very engine that powers continuous, data‑driven growth.

When you pair strategic testing with specialized AB testing services, you:

- Reduce Risks: Incremental rollouts vs. big‑bang redesigns.

- Uncover Deep Insights: Understand what truly moves the needle for your users.

- Iterate Quickly: Establish a cadence of rapid experiments and quick wins.

- Scale Sustainably: Embed testing within a culture of experimentation and cross‑team collaboration.

At Tatvic, our AB testing services blend technical expertise, behavioral science, and hands-on support to guide you every step of the way, from hypothesis generation to test setup, analysis, and rollout.

We tailor our services to your business objectives, ensuring every experiment aligns with your brand voice and long‑term goals.

Ready to put your CRO on autopilot?

Contact us today to kick off your first experiment and discover the true power of data-driven optimization.